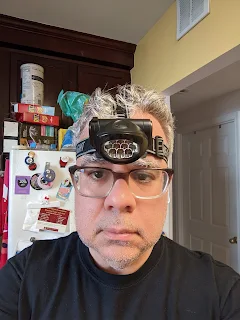

This is how I feel about measuring effort rather than results. Effort is where the light is. You have time estimates from planning and you have time spent on a burndown chart. You have lines of code and number of commits. You have green tests and passing builds. You have loads of data that show what effort was made and how long it took. All of that is really easy to measure, and that might be why so much effort goes into measuring the easy-to-measure thing.

Here's the thing. All of that data would still be there even if the only reason you did the thing was that it was cool and you (or the product owner) wanted to, and the feature provided no benefit to the stakeholders.

This is why I am making an effort to write stories that specifically state expected measurable results as part of the acceptance criteria, beyond the normal "it works and does a thing". The aim is to increase focus on the value of the outcomes of the work rather than just the effort of completing the task in the current sprint.

What I am aiming for are criteria, developed with the product owners, that would show that the work was not only completed, but that it did a thing that we wanted to get done in a way that makes sense for the stakeholders. Adding a feature that no one uses, that no one wanted, or that made some other aspect of the application worse might be a successful completion of a sprint task. It might even be what the customer wanted. It just isn't a good outcome.

Old Style:

Story: As a user, it should be possible to add a new payment type to an existing account.

Acceptance: User account with n+1 payment types.

In this case, you have a what, not a how, and it can be tested in a standard given, when, expect format test. This is very typical and in many cases might be the right approach.

New Style:

Story: As a user, it should be possible to add a new payment type to an existing account.

Acceptance: User account with n+1 payment types.

Expected results: The number of support tickets related to payment type issues should decline. The number of users with multiple payment types should increase. User retention should increase or stay the same. The number of users looking at the help screens for payment types, or looking at help while adding a payment type, should decline.

In this case, you also have a what, plus a set of why criteria that should be true if and only if the change added value in the way the product owner expected, based on their view of the users' needs and wants.
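One way to keep expected results from staying a paragraph nobody revisits is to write them down as data that can be checked against before and after measurements. The following is a minimal sketch, not a prescribed tool: the metric names, the `ExpectedResult` class and the `evaluate` helper are all hypothetical, and where the numbers come from (analytics, ticket system) is left open.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    DECREASE = "should decline"
    INCREASE = "should increase"
    NOT_DECREASE = "should increase or stay the same"


@dataclass(frozen=True)
class ExpectedResult:
    """One 'why' criterion attached to a story (hypothetical structure)."""
    metric: str
    direction: Direction

    def holds(self, before: float, after: float) -> bool:
        # Compare the metric before and after the change shipped.
        if self.direction is Direction.DECREASE:
            return after < before
        if self.direction is Direction.INCREASE:
            return after > before
        return after >= before


# The expected results from the payment type story, with made-up metric names.
PAYMENT_TYPE_STORY = [
    ExpectedResult("payment_support_tickets", Direction.DECREASE),
    ExpectedResult("users_with_multiple_payment_types", Direction.INCREASE),
    ExpectedResult("user_retention", Direction.NOT_DECREASE),
    ExpectedResult("payment_help_screen_views", Direction.DECREASE),
]


def evaluate(expected, before, after):
    """Return a dict mapping each metric name to whether its expectation held."""
    return {
        e.metric: e.holds(before[e.metric], after[e.metric])
        for e in expected
    }
```

A raw before/after comparison like this ignores noise and seasonality, so it is a prompt for a conversation with the product owner rather than a verdict.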

But what if you're wrong? Then the effort might not have been worth it. Maybe the users didn't want that functionality. Maybe it works just fine but isn't discoverable and they don't know it's there. Finding out that the thing that was done didn't result in the thing you wanted might be disappointing, but it is a valuable insight that you only get from putting that bet on the table.

Old Style:

Story: Improve mobile page load speed while keeping the look and feel.

Acceptance: Page is loaded, responsive and feature complete in X seconds.

Again, perfectly cromulent criteria that focus on a what and not a how, and that can be tested in a standard given, when, expect format test.

New Style:

Story: Improve mobile page load speed while keeping the look and feel.

Acceptance: While keeping the style and branding of the pages consistent, the page should be loaded, responsive and feature complete in X seconds.

Expected results: The number of users that time out on subsequent page loads should decrease. The median page load time should decrease in proportion. The number of users who bounce while waiting for a page load should decrease.

In this case you are specifying why you think improving mobile page load speed is worth the effort and what you think the results of making the page faster should be. If you reach the load time goal and do not see the expected benefits, you have to go back and reconsider what would make the page seem fast, or whether page load time is even what the users are unhappy with. That's disappointing, but would you know this without making this part of the story?

What if you can't think of measurable outcomes?

Are you sure there is a reason why you are doing this outside of "it's cool and I want to"?

What if I'm wrong?

Failing faster is better. There is no bigger waste than adding, optimizing or improving an unnecessary or unwanted feature. Anything done to prevent this would be a good thing, even being wrong often.

Wouldn't it take a while to show that the results are there?

In most cases I'm interested in, yes. I expect the features to be A/B tested to see if they yield the expected results. If they don't, I would consider recommending rejecting or canceling the change and reevaluating the goal.
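As a rough illustration of what "yield the expected results" could mean for a rate-style metric such as bounce rate, here is a sketch of a pooled two-proportion z-test using only the standard library. The function name and the idea of applying it to bounce counts are my assumptions; a real experiment would also need a sample size decided up front and probably a proper statistics package.

```python
import math


def two_proportion_z_test(events_a, total_a, events_b, total_b):
    """Compare the event rate of group B (variant) against group A (control).

    Returns (z, p_value), where p_value is two-sided under a normal
    approximation. A negative z means the rate in B is lower than in A.
    """
    rate_a = events_a / total_a
    rate_b = events_b / total_b
    # Pooled rate under the null hypothesis that both groups behave the same.
    pooled = (events_a + events_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Every user (or no user) had the event in both groups: no signal.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(200, 1000, 150, 1000)` compares a hypothetical control with 200 bounces out of 1000 sessions against a variant with 150 out of 1000. A negative `z` with a small `p_value` would support the expectation that bounces decreased; anything else is a reason to revisit the bet.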

Another potential advantage here is that the measurable acceptance criteria can be continually tracked in future sprints, either by automated testing or by gathering analytical data from the running application. In cases where a future change breaks a prior positive expected result, it can be properly tracked as a regression error that undid some prior useful work.

A drop in the use of a feature following addition of new functionality might be evidence of changing use cases or evidence that the feature was made less useful. This approach may find cases where some functionality has been "broken" by a subsequent change even when all unit and integration tests continue to pass.
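Tracking an expected result across sprints can be as simple as comparing each sprint's value with the previous one and flagging moves in the wrong direction. This is only a sketch under assumptions: `find_regressions`, the per-sprint history format and the 5% tolerance are all hypothetical choices, not part of any particular analytics tool.

```python
def find_regressions(history, higher_is_better, tolerance=0.05):
    """Flag sprints where a tracked metric got worse than the sprint before.

    history: list of (sprint_label, value) pairs in chronological order.
    tolerance: relative change that is ignored as noise (0.05 means 5%).
    Returns a list of (sprint_label, relative_change) for flagged sprints.
    """
    regressions = []
    for (_, previous), (label, value) in zip(history, history[1:]):
        if previous == 0:
            # Relative change is undefined; skip rather than guess.
            continue
        change = (value - previous) / abs(previous)
        if higher_is_better:
            worsened = change < -tolerance
        else:
            worsened = change > tolerance
        if worsened:
            regressions.append((label, round(change, 3)))
    return regressions
```

A flagged sprint is not proof that something broke. As noted above, a drop might reflect changing use cases, so the flag is a cue to look at what shipped in that sprint.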